Many developers use version control systems such as SVN (Apache Subversion) and Git to track changes in their source code. Such tools are essential for organizations with multi-developer projects. Most of these version control systems create hidden internal directories, which typically contain extensive information about the files and directories stored in the current directory. As you might have guessed, this metadata can include sensitive and confidential information. However, when developers publish website files from these systems to live servers, they sometimes forget to delete these directories or to restrict access to them. This oversight can pose a very high security risk to the company.

During a web application security scan, Acunetix WVS looks for these types of directories and alerts the user if they are discovered. Acunetix WVS also crawls and parses the contents of these hidden directories and uses the information gathered to reconstruct the site structure and find even more vulnerabilities.

For example, Subversion uses a hidden directory named .svn. Inside this directory there is a file named entries (/.svn/entries). This file contains a lot of sensitive information: a list of all the files and directories present in the current directory, the usernames of people who have committed files in this directory, exact file modification dates and more.

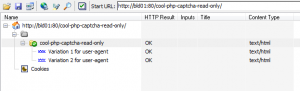
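To make the exposure concrete, here is a minimal Python sketch of how the names listed in such a file could be extracted. It assumes the plain-text entries layout used by older Subversion working copies (before 1.7), in which a format-number line is followed by records separated by a form feed, and each record starts with a name line and a kind line. The function name `parse_svn_entries` and the sample data are invented for illustration, real files carry many more fields per record, and this is not a description of how Acunetix WVS itself parses the file.

```python
def parse_svn_entries(text):
    """Return (name, kind) pairs from a plain-text .svn/entries file.

    Assumed layout: a format-number line, then records separated by a
    form feed plus newline; each record begins with a name line and a
    kind line ("file" or "dir"). The record with an empty name describes
    the directory itself and is skipped.
    """
    results = []
    for index, record in enumerate(text.split("\x0c\n")):
        lines = record.split("\n")
        if index == 0:
            lines = lines[1:]  # drop the leading format-number line
        if len(lines) < 2:
            continue
        name, kind = lines[0], lines[1]
        if name and kind in ("file", "dir"):
            results.append((name, kind))
    return results


# Invented sample data, heavily shortened compared with a real file.
SAMPLE = (
    "10\n\ndir\n42\nhttp://svn.example.com/repo/trunk\n\x0c\n"
    "index.php\nfile\n\x0c\n"
    "backup_x9f3.sql\nfile\n\x0c\n"
    "admin\ndir\n\x0c\n"
)

for name, kind in parse_svn_entries(SAMPLE):
    print(kind, name)
```

Even this shortened sample reveals a hard-to-guess backup file name and an admin directory that no page links to.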

Acunetix WVS reads this file, parses it and sends all the information to its crawler. By recursively parsing all these files, the crawler is able to completely reconstruct the site structure and discover hidden, debug and test files and directories left there by developers. Sometimes developers create SQL database backup files using hard-to-guess filenames, thinking that it is safe to leave such backups lying around. If the .svn directories are accessible, the crawler will find a list of all these files.

As an example, I have downloaded an open source project called “cool-php-captcha”. As seen below, while crawling this web application, the crawler did not discover much because the application does not contain an index page and all directory listings are disabled.

However, if you scan the same web application using Acunetix WVS, the final site structure will look like this:

As you can see, Acunetix WVS found all the .svn/entries files, parsed them and sent the information to the crawler. The crawler managed to reconstruct the whole website structure: all the files and directories from the repository. Once the site structure is reconstructed, Acunetix WVS automatically scans all these files for vulnerabilities. Currently, Acunetix WVS can find CVS, SVN, Git, Bazaar and Mercurial repositories and can parse SVN and Git repository files. Support for parsing more formats will be added later.
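The recursive reconstruction described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the scanner's actual implementation: `fetch` is a hypothetical callable that returns the body of a URL path or `None` when it is not accessible, the entries layout is the simplified pre-1.7 plain-text one (name line, kind line, records separated by a form feed), and the "site" is an in-memory dictionary with invented contents.

```python
from collections import deque


def list_entries(text):
    """Return (name, kind) pairs from a simplified plain-text entries file."""
    pairs = []
    for index, record in enumerate(text.split("\x0c\n")):
        lines = record.split("\n")
        if index == 0:
            lines = lines[1:]  # skip the format-number line
        if len(lines) >= 2 and lines[0] and lines[1] in ("file", "dir"):
            pairs.append((lines[0], lines[1]))
    return pairs


def reconstruct(fetch, root="/"):
    """Walk directories breadth-first, reading .svn/entries in each one.

    fetch(path) is a hypothetical helper returning the response body as
    text, or None when the path cannot be retrieved.
    """
    found = []
    seen = {root}
    queue = deque([root])
    while queue:
        directory = queue.popleft()
        body = fetch(directory + ".svn/entries")
        if body is None:
            continue  # metadata not exposed here, nothing to learn
        for name, kind in list_entries(body):
            path = directory + name + ("/" if kind == "dir" else "")
            if path in seen:
                continue
            seen.add(path)
            found.append(path)
            if kind == "dir":
                queue.append(path)  # descend into the subdirectory later
    return found


# Invented in-memory "site": URL path -> entries file content.
FAKE_SITE = {
    "/.svn/entries": "10\n\ndir\n\x0c\ncaptcha.php\nfile\n\x0c\nresources\ndir\n\x0c\n",
    "/resources/.svn/entries": "10\n\ndir\n\x0c\nfonts\ndir\n\x0c\nwords.txt\nfile\n\x0c\n",
}

for path in reconstruct(FAKE_SITE.get):
    print(path)
```

With the sample data, the walk recovers `/captcha.php`, `/resources/`, `/resources/fonts/` and `/resources/words.txt` without relying on any link or directory listing.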

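How do you protect your repository from such a vulnerability? When the website source code is rolled out to a live server from a repository, it should be deployed as an export (for example, with svn export) rather than as a checked-out working copy. Otherwise, you can restrict access to the hidden directories with an Apache configuration entry like the example below. Note that DirectoryMatch sections are valid only in the main server or virtual host configuration, not in an .htaccess file: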

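    # Block web access to Subversion metadata directories.
    # On Apache 2.4 and later, "Require all denied" is the preferred directive.
    <DirectoryMatch "/\.svn(/|$)">
        Deny from all
    </DirectoryMatch>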
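As a complement to the server-side rule, a deployment step can check that no version control metadata is left in the directory about to be published. The following Python sketch assumes the conventional metadata directory names of the systems mentioned in this article (.svn, .git, .hg, .bzr and CVS); the function names and the temporary directory layout in the demo are hypothetical.

```python
import os
import tempfile

# Conventional metadata directory names for SVN, Git, Mercurial, Bazaar and CVS.
VCS_DIR_NAMES = {".svn", ".git", ".hg", ".bzr", "CVS"}


def find_vcs_dirs(root):
    """Return sorted paths, relative to root, of VCS metadata directories."""
    hits = []
    for current, dirnames, _filenames in os.walk(root):
        for name in list(dirnames):
            if name in VCS_DIR_NAMES:
                full_path = os.path.join(current, name)
                hits.append(os.path.relpath(full_path, root))
                dirnames.remove(name)  # do not descend into the metadata itself
    return sorted(hits)


def demo():
    """Build a throwaway tree that mimics a working copy and scan it."""
    with tempfile.TemporaryDirectory() as root:
        os.makedirs(os.path.join(root, ".svn"))
        os.makedirs(os.path.join(root, "images", ".svn"))
        os.makedirs(os.path.join(root, "css"))
        return find_vcs_dirs(root)


print(demo())
```

A deployment script could call `find_vcs_dirs` on the publish directory and abort when the returned list is not empty.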

